Google Analytics is an incredibly powerful tool that, when used well, can provide invaluable insights into marketing efforts, user behavior, and even site health. That said, it’s not without its flaws. Redundancies in traffic recording, artificial traffic from pesky botnets, and even your own visits to your website can all interfere with your data, skewing and obscuring information.

Below are a few simple ways to clean up your Google Analytics and keep your data legitimate and pristine.

Exclude yourself from your data

One of the simplest ways to improve recording accuracy is to remove yourself from the equation. Google Analytics offers filtering options, which allow you to exclude certain IP addresses from your data moving forward. This can be useful for excluding your own organization’s visits, as well as your clients’ visits if you’re acting as webmaster.

First, you must identify the IP address to be excluded. The site whatismyipaddress.com handles this with a single click, or you can simply search “What is my IP address?” and the Knowledge Graph should automatically serve you that information.

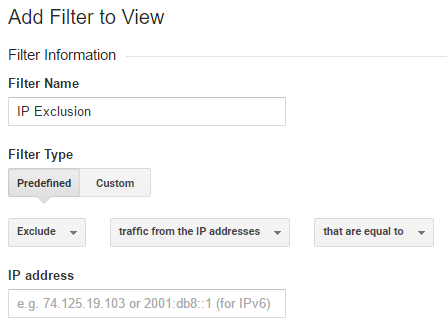

Next, go to the Admin section of the Google Analytics account in question and click Filters. If you have the appropriate level of access to the account, you’ll see a large red button that takes you to a page with a few options, including Filter Name, Filter Type, and so on.

It’s now just a matter of configuring these settings to fit our purposes. Below is an example of how I typically configure IP Exclusion filters.

Simply plug in the IP address you’d like to exclude, click Save and you’re done! Sessions from that IP address will no longer be a part of your data set moving forward.
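To make the filter’s behavior concrete, here is a small Python model of an IP exclusion filter, assuming it is set to exclude traffic from IP addresses that are *equal to* the value you enter. It is only a local sketch: the session records and addresses (taken from the documentation-only ranges) are hypothetical, and Google Analytics applies real filters to incoming data as it is processed, not to lists like this.

```python
# Hypothetical excluded addresses (RFC 5737 documentation ranges, not real visitors).
EXCLUDED_IPS = {"203.0.113.10", "198.51.100.7"}


def apply_ip_exclusion(sessions, excluded_ips):
    """Return only the sessions whose IP is not exactly equal to an excluded address."""
    return [s for s in sessions if s["ip"] not in excluded_ips]


# Hypothetical session records standing in for incoming traffic.
sessions = [
    {"ip": "203.0.113.10", "page": "/"},       # your own office: excluded
    {"ip": "192.0.2.44", "page": "/pricing"},  # a real visitor: kept
    {"ip": "203.0.113.100", "page": "/blog"},  # similar-looking IP: kept (exact match only)
]

kept = apply_ip_exclusion(sessions, EXCLUDED_IPS)
print([s["ip"] for s in kept])  # ['192.0.2.44', '203.0.113.100']
```

Because the match is exact, 203.0.113.100 survives even though it begins with an excluded address; excluding a whole range of addresses calls for a different match type or a regular expression.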

Exclude botnets & create a segment

You may have seen some unsavory sites pop up in your referrals over the past year or two. These so-called botnets are harmless annoyances that populate your site’s traffic data with illegitimate sessions. While they aren’t a threat to your site, they do bloat your referrals and make meaningful comparisons more of a challenge.

Google has stated that it has dealt with these troublesome bots, but they still appear regularly in my clients’ referrals. Even so, Google’s solution is too simple not to implement.

Head back over to Admin and open View Settings. There are several options here, but we’re only looking for one, appropriately titled Bot Filtering. Smack that check box (Exclude all hits from known bots and spiders), slam Save, and you’re done with the first part.

We also want to create a segment to exclude any botnets that Bot Filtering overlooks. Segments have to be applied to the reports we look at, but that’s an extra three or four clicks at most.

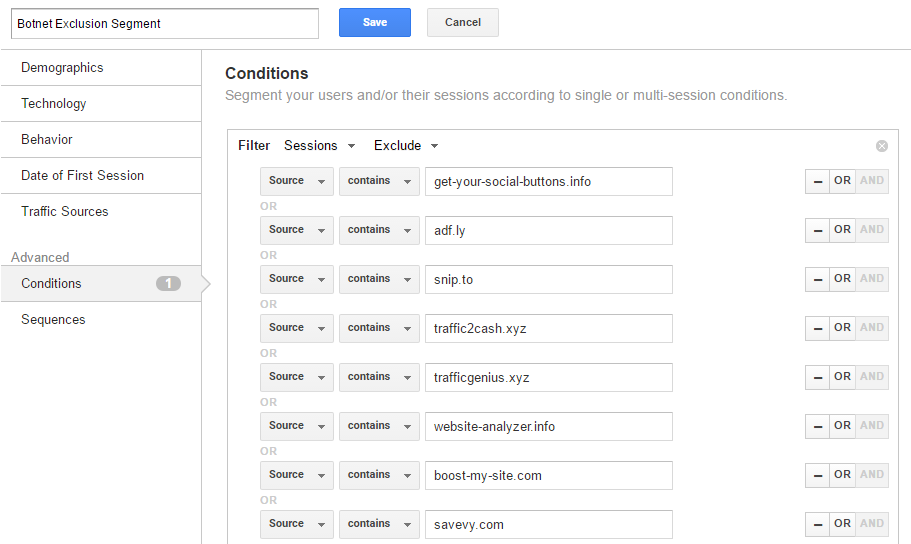

You’re surely familiar with the Admin section at this point, so this time take a look at the Segments section. Again, you’ll need the appropriate permissions to create new segments; if you have them, click the red NEW SEGMENT button.

This screen looks a bit more complicated than the others we’ve seen, but we’re only interested in one option, named Conditions. Most of this section is up to you, as no two sites receive spam referrals from exactly the same botnets. Luckily, with names like traffic2cash.xyz and getlamborghini.ga, the fakers aren’t hard to spot.

Here’s how I typically configure my botnet exclusion segment:

You may even have to create additional segments to cover all of your bases. The number of conditions you can apply per segment is limited to 20 or so, so I focus on the most prolific botnets.
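One way to stretch a limited number of conditions, offered as an alternative rather than the setup described above, is to fold several spam domains into a single condition that uses a regular-expression match. The Python sketch below builds such a pattern and uses it to drop matching sessions. The session data is hypothetical, the two domains are the examples named earlier, and you should confirm the generated pattern behaves as expected inside Google Analytics before relying on it.

```python
import re

# Spam referrers named earlier in this post; extend the list with your own offenders.
SPAM_DOMAINS = ["traffic2cash.xyz", "getlamborghini.ga"]


def build_spam_pattern(domains):
    """Compile one regex matching any listed domain or its subdomains, anchored at the end."""
    alternation = "|".join(re.escape(d) for d in domains)
    return re.compile(rf"(^|\.)({alternation})$")


def exclude_spam(sessions, pattern):
    """Keep only sessions whose source does not match the spam pattern."""
    return [s for s in sessions if not pattern.search(s["source"])]


pattern = build_spam_pattern(SPAM_DOMAINS)
print(pattern.pattern)  # (^|\.)(traffic2cash\.xyz|getlamborghini\.ga)$

# Hypothetical sessions to check the pattern against.
sessions = [
    {"source": "traffic2cash.xyz"},
    {"source": "www.getlamborghini.ga"},
    {"source": "google"},
    {"source": "nottraffic2cash.xyz"},  # a different domain that merely ends the same way
]
print([s["source"] for s in exclude_spam(sessions, pattern)])  # ['google', 'nottraffic2cash.xyz']
```

Anchoring with `(^|\.)` and `$` catches subdomains of a spam domain while leaving alone an unrelated domain that happens to end in the same characters.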

Ensure redundant hostnames are accounted for

This is more of a technical SEO issue than a problem with Analytics itself, but by making sure all versions of your site are accounted for, you can avoid a headache and consolidate all of your website’s traffic into one pool. Unless you have development experience and resources, there isn’t a ton you can do yourself as an SEO, webmaster or marketing dynamo. With the help of your developer friends, though, implementing redirects so that the www, non-www, http and https versions of your website all resolve to a single canonical version should be fairly trivial.
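The redirect rule your developers implement boils down to a small decision: if a request arrives on a non-canonical scheme or hostname, send a permanent (301) redirect to the same path on the canonical version. Here is a Python sketch of that decision, assuming https://www.example.com (a placeholder) is the version you settle on. In practice the redirect would live in your web server or CMS configuration, not in a script like this.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Assumed canonical version of the site; example.com is a placeholder.
CANONICAL_SCHEME = "https"
CANONICAL_HOST = "www.example.com"
# Every hostname that should resolve to the canonical version.
SITE_HOSTS = {"example.com", "www.example.com"}


def redirect_target(url: str) -> Optional[str]:
    """Return the canonical URL to 301-redirect to, or None if no redirect is needed."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()  # .hostname drops any port number
    if host not in SITE_HOSTS:
        return None  # not one of our site's hostnames
    if parts.scheme == CANONICAL_SCHEME and host == CANONICAL_HOST:
        return None  # already canonical
    # Keep the path and query string so visitors land on the same page.
    return urlunsplit((CANONICAL_SCHEME, CANONICAL_HOST, parts.path or "/", parts.query, ""))


for url in ["http://example.com/about?x=1", "https://example.com", "https://www.example.com/about"]:
    print(url, "->", redirect_target(url))
```

Making the redirect permanent (301) rather than temporary tells search engines which version to treat as the real one.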

This also comes with the added benefit of increasing site-wide consistency and simplifying the management of your site within Google Search Console and Bing Webmaster Tools, both of which are incredibly useful tools you should be leveraging on a regular basis.

Combat issues resulting from Google search on Android

This is a relatively new nuisance; it only began interfering with organic traffic recording in the last month or so. The long and short of it is that Google launched a new version of its Android search app, and organic traffic from those searches now incorrectly registers as referral traffic in Google Analytics. I’m confident Google will fix this within the year, but until then, many sites appear to have lost organic traffic when they haven’t. You can read more about this issue in this excellent post by Brandon Wensing over at Seer Interactive.

Let’s fix it.

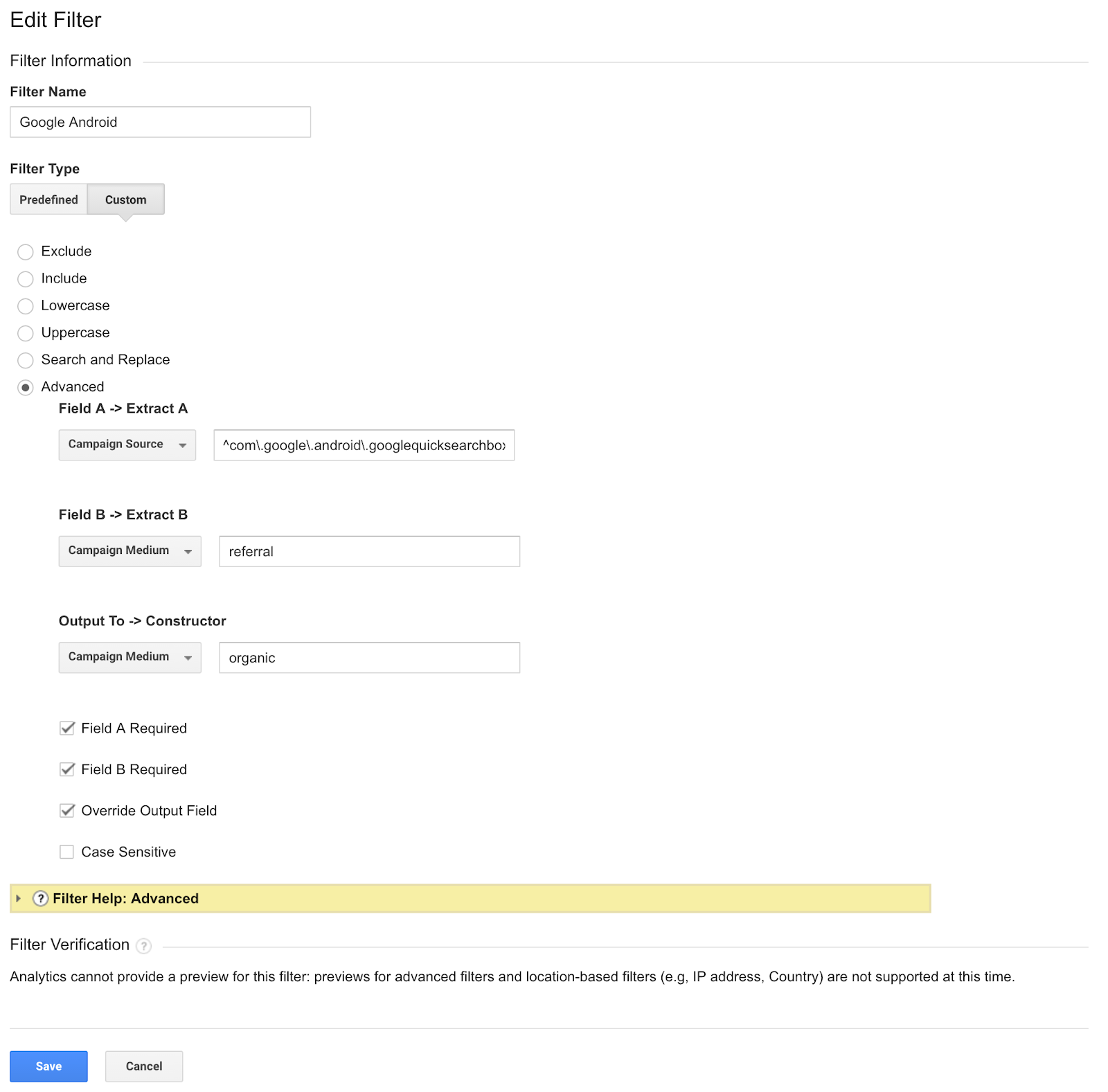

This organic traffic appears as referrals from a source called com.google.android.googlequicksearchbox. We’d like to fold it back into our pool of organic search traffic, and one of the easiest ways to do that is to create a filter that reassigns referrals from this source to the organic medium.

After your third or fourth trip to Admin, click Filters once again and create a new filter that looks something like this:

The pattern in Field A, set to Campaign Source, is `^com\.google\.android\.googlequicksearchbox$`, a regular expression that matches only that exact source, so only the mislabeled traffic is reallocated.
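Here is a Python model of what the filter does to each incoming session, assuming (as described above) that matching traffic should end up in the organic medium. The session dictionaries are hypothetical, and the literal value "organic" is an assumption about the filter’s output setting. Python’s `re.search`, like a regular expression in a Google Analytics filter, matches anywhere in the field unless anchored, which is why the `^` and `$` matter.

```python
import re

# Same pattern as the filter's Field A (Campaign Source); ^ and $ restrict it to an exact match.
ANDROID_APP_SOURCE = re.compile(r"^com\.google\.android\.googlequicksearchbox$")


def reassign_android_search(session: dict) -> dict:
    """Return a copy of the session, moved to the organic medium if its source matches."""
    updated = dict(session)  # copy so the original record is left untouched
    if ANDROID_APP_SOURCE.search(updated.get("source", "")):
        updated["medium"] = "organic"  # assumed output value for the filter
    return updated


# Hypothetical incoming sessions.
sessions = [
    {"source": "com.google.android.googlequicksearchbox", "medium": "referral"},
    {"source": "example-partner.com", "medium": "referral"},
]
for s in sessions:
    print(reassign_android_search(s))
```

Only the first session is moved to organic; the genuine referral from example-partner.com keeps its referral medium.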

And that’s it! All future traffic from this source should now be placed in the appropriate medium. Credit goes to Carlos Escalera of oHow.co for this solution.

Conclusion

I hope this was a helpful, surface-level foray into Google Analytics improvements and customization. Let us know what you think on Facebook and Twitter!